FIRST Robotics Competition 2024

2024 | Completed

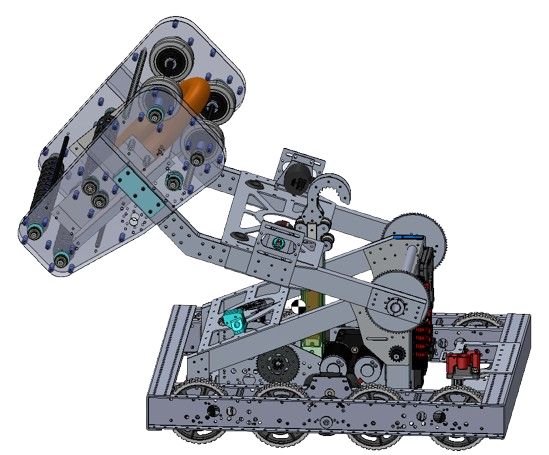

The 2024 FRC game involved scoring rings into different-sized goals. As the team's lead programmer, I managed a team of 6 to ensure all subsystems were complete.

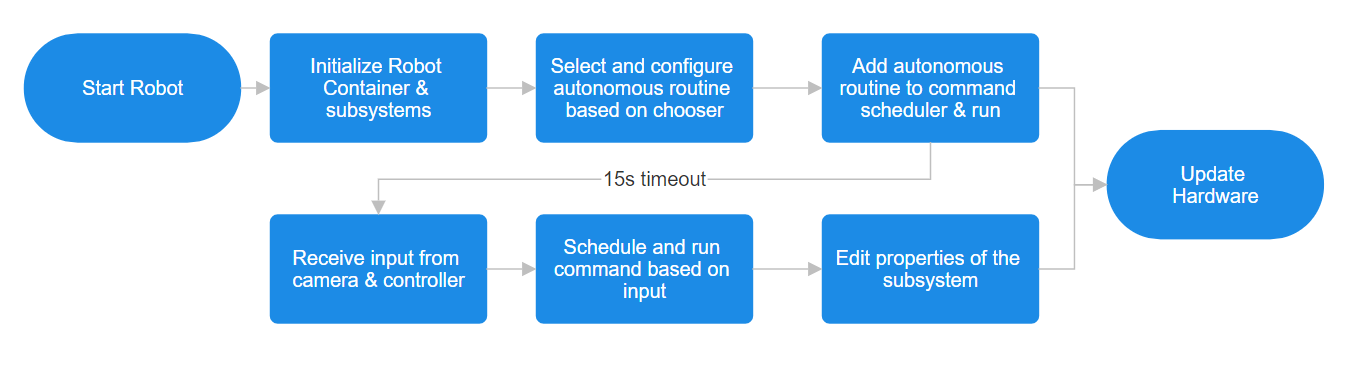

Code Structure:

The robot code was organized in a command/subsystem-based structure.

See the code!

Autonomous Routine:

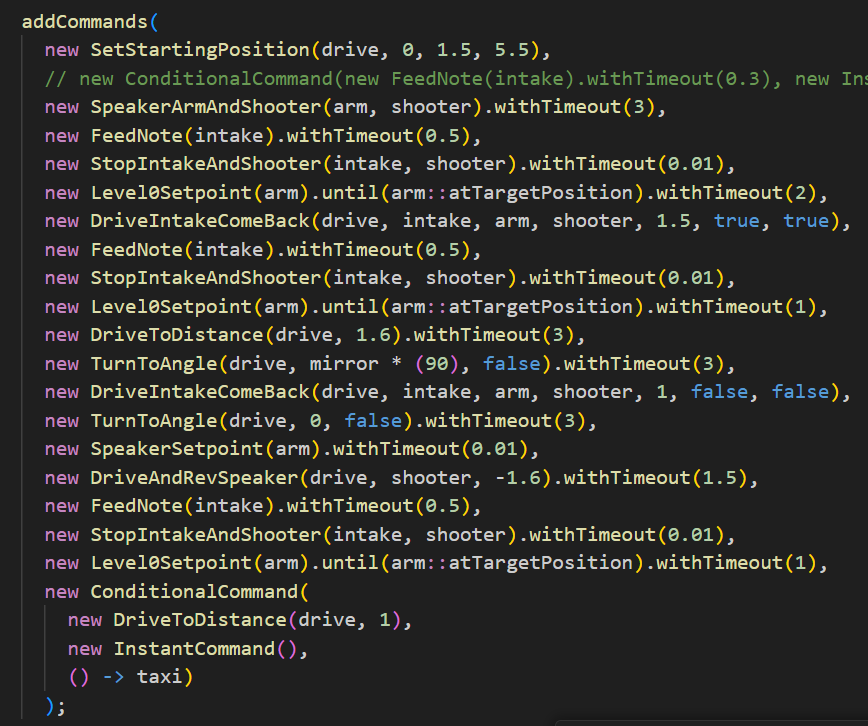

For the first 15 seconds of the match, the robot is completely autonomous, meaning it runs a pre-programmed routine. These routines are complex combinations of many simple commands, such as turning a certain angle, lifting the arm to a certain position, or spinning the shooter to a certain speed. These base commands are combined sequentially or in parallel to create more complex, useful commands. This is a routine I wrote that scores 3 game pieces.
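To illustrate how base commands can be combined sequentially or in parallel, here is a minimal sketch in plain Python. It is not the team's code and does not use any robot library; the class names (`Command`, `CountdownCommand`, `Sequential`, `Parallel`) and the `run` helper are hypothetical stand-ins chosen for this example.

```python
class Command:
    """Minimal command: step() runs once per loop and returns True when finished."""

    def step(self) -> bool:
        raise NotImplementedError


class CountdownCommand(Command):
    """Hypothetical base command that finishes after a fixed number of loops."""

    def __init__(self, name, ticks, log):
        self.name = name
        self.remaining = ticks
        self.log = log

    def step(self):
        self.remaining -= 1
        if self.remaining <= 0:
            self.log.append(self.name)  # record the order commands finish in
            return True
        return False


class Sequential(Command):
    """Runs commands one after another; finishes when the last one finishes."""

    def __init__(self, *commands):
        self.pending = list(commands)

    def step(self):
        if self.pending and self.pending[0].step():
            self.pending.pop(0)
        return not self.pending


class Parallel(Command):
    """Runs commands together; finishes when all of them have finished."""

    def __init__(self, *commands):
        self.running = list(commands)

    def step(self):
        # Step every running command and keep only the unfinished ones.
        self.running = [c for c in self.running if not c.step()]
        return not self.running


def run(command, max_loops=1000):
    """Steps a command until it finishes and returns the number of loops used."""
    for loops in range(1, max_loops + 1):
        if command.step():
            return loops
    raise RuntimeError("command did not finish")
```

With these pieces, something like `Sequential(Parallel(raise_arm, spin_shooter), feed)` would raise the arm and spin the shooter together, then feed the game piece only once both are ready.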

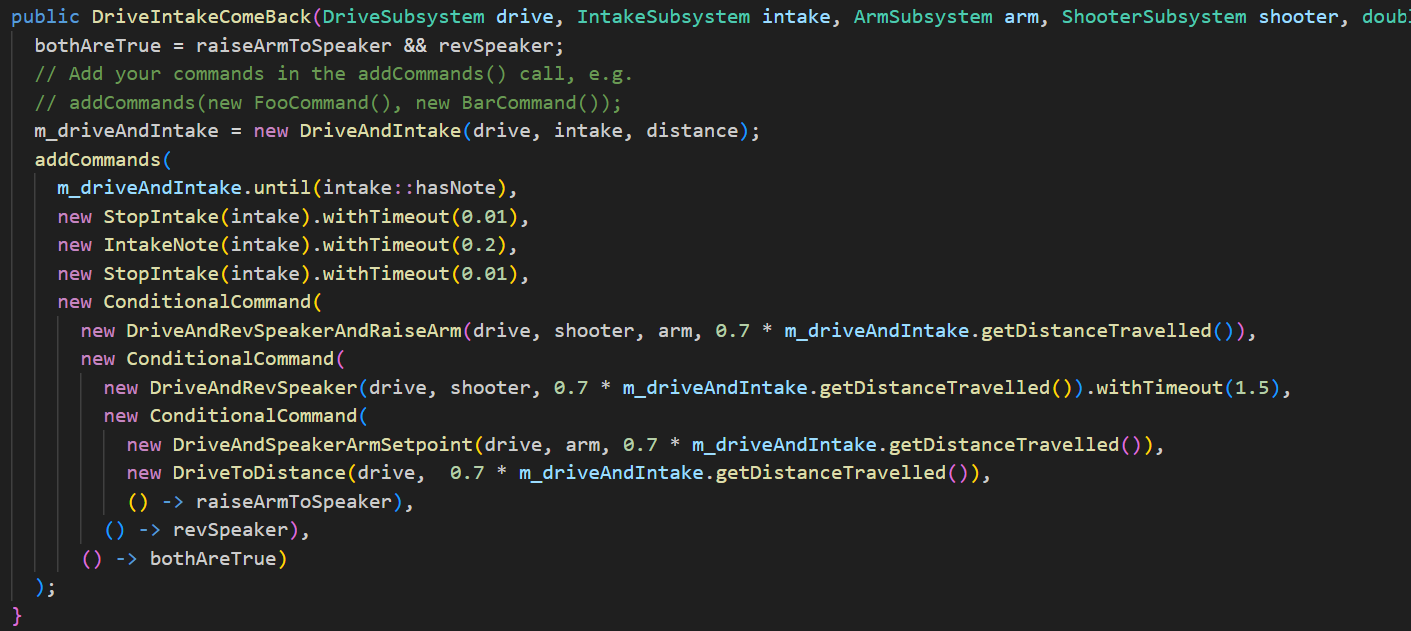

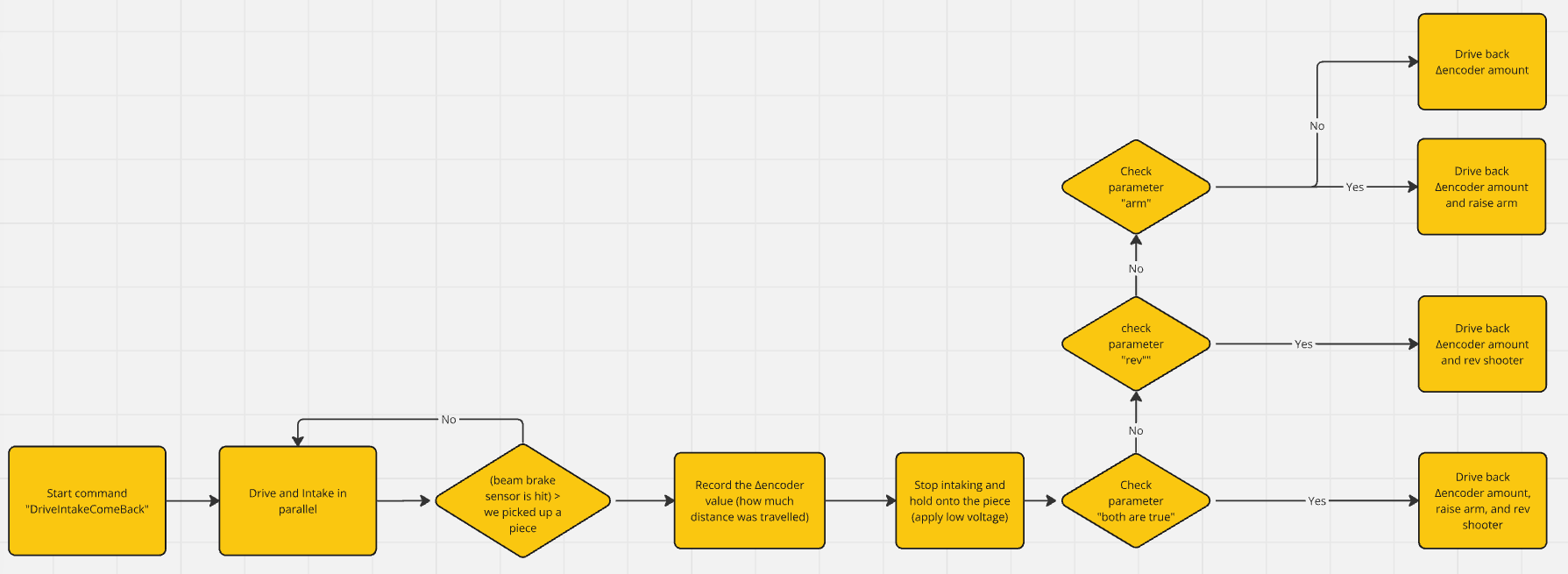

All of the commands are custom classes, and this robot project had over 65 of them. Here's the specific command that drives backwards until it detects a piece, and then drives forward the same amount:

This command will do slightly different things based on the arguments when it is called. This allows it to be reused in different contexts.
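The behavior described above can be sketched as a small state machine. This is only an illustration in plain Python, not the original command: the class name `BackUpUntilPiece`, its parameters (`speed`, `max_distance`, `return_to_start`), and the idea of passing the encoder position and sensor reading into `step` are all assumptions made for the example.

```python
class BackUpUntilPiece:
    """Drives backwards until a piece is detected, then optionally drives back."""

    def __init__(self, speed=0.5, max_distance=2.0, return_to_start=True):
        self.speed = speed                      # drive output magnitude
        self.max_distance = max_distance        # safety limit on backing up
        self.return_to_start = return_to_start  # argument that changes behavior
        self.start = None
        self.state = "backing"

    def step(self, position, piece_detected):
        """Returns the drive output for this loop (negative means backwards)."""
        if self.start is None:
            self.start = position  # remember where the command began

        if self.state == "backing":
            traveled = self.start - position
            if piece_detected or traveled >= self.max_distance:
                self.state = "returning" if self.return_to_start else "done"
            else:
                return -self.speed

        if self.state == "returning":
            if position >= self.start:
                self.state = "done"
            else:
                return self.speed

        return 0.0

    def is_finished(self):
        return self.state == "done"
```

In this sketch, constructing the command with `return_to_start=False` makes it stop where the piece was picked up, which is one way the same class could serve different routines.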

Vision Processing:

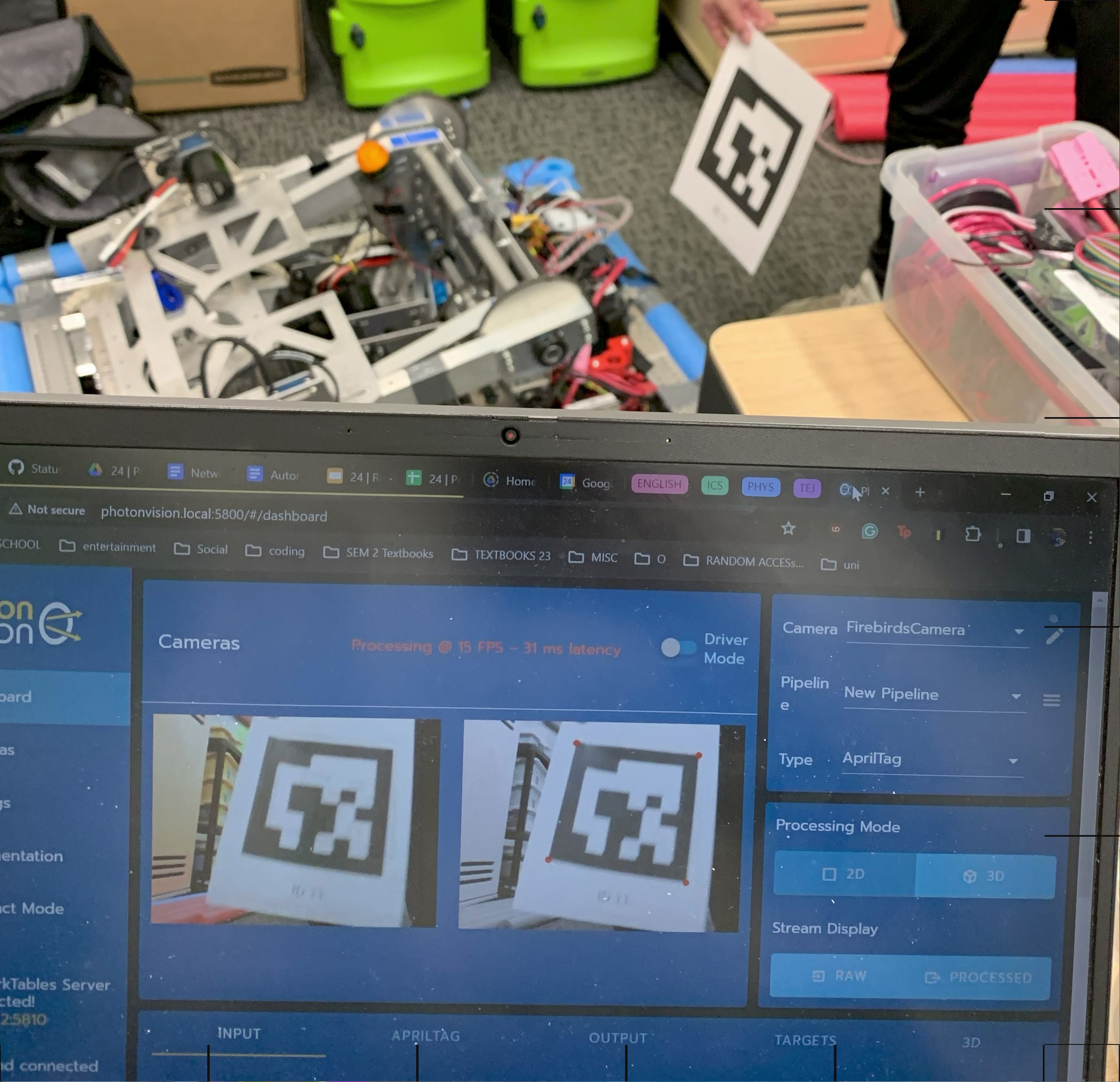

For this robot, we used a system similar to the previous year's to get the arm to a specific angle, but we noticed that it only allowed our robot to shoot pieces from the closest distance to the target. Shooting from any distance would require a greater range of setpoints. To solve this problem, I designed a system that automatically adjusts the arm angle based on a distance reading from our camera.

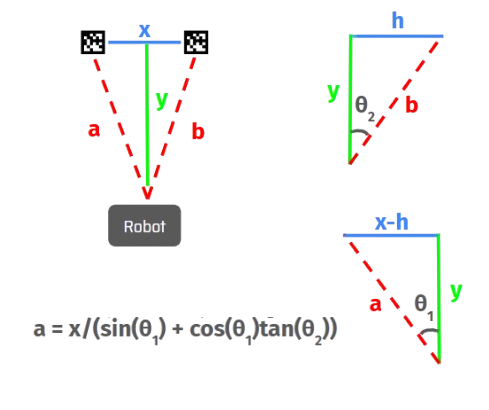

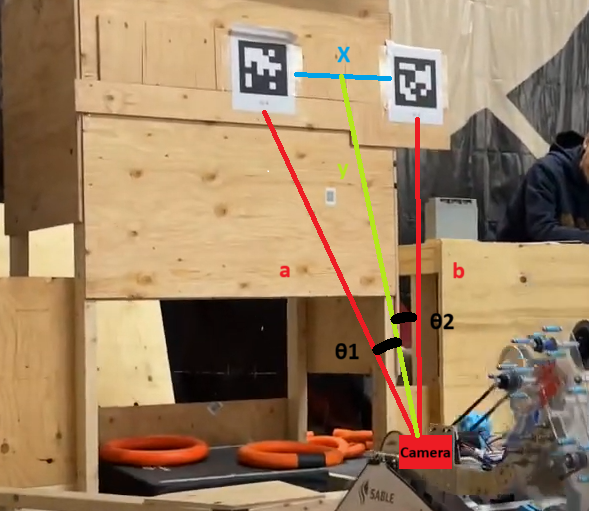

Our robot’s front-facing camera was connected to a Raspberry Pi running PhotonVision, a vision processing program that could return angles describing the robot’s orientation relative to certain targets. Using these angles, I developed a formula that triangulated the robot’s distance from these targets.
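The exact formula is not reproduced here, but a common way to get distance from a camera angle is the right-triangle relation distance = (target height - camera height) / tan(camera pitch + target pitch). The sketch below assumes that relation; the function name and all parameter names are hypothetical, and it assumes a fixed camera mounting height and pitch.

```python
import math


def distance_to_target(camera_height_m, target_height_m,
                       camera_pitch_deg, target_pitch_deg):
    """Estimates horizontal distance to a target of known height.

    camera_pitch_deg: how far the camera is tilted up from horizontal (assumed fixed).
    target_pitch_deg: vertical angle to the target reported by the vision system.
    """
    total_pitch = math.radians(camera_pitch_deg + target_pitch_deg)
    height_difference = target_height_m - camera_height_m
    return height_difference / math.tan(total_pitch)
```

This estimate becomes very sensitive to noise when the total pitch is close to zero, so it works best when the target sits well above or below the camera.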

Auto Aiming:

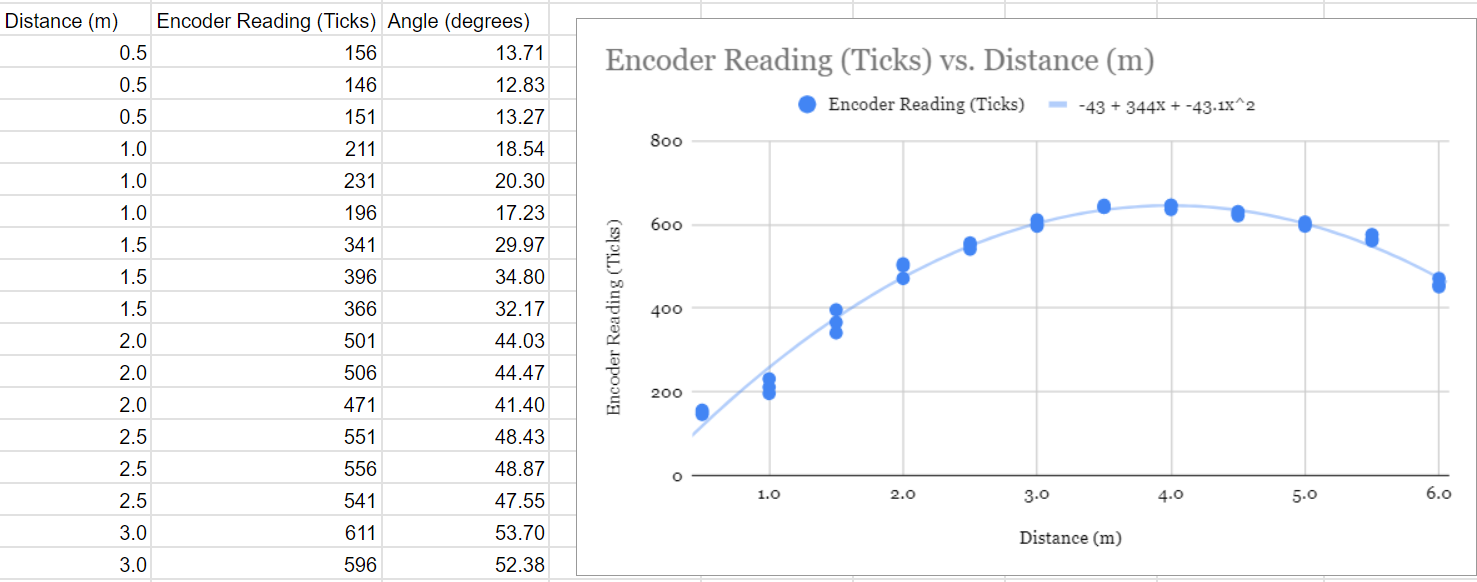

The distance value from vision processing was fed into a function derived from test data, which automatically adjusted the arm’s angle so the robot could score game pieces from any distance.
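One simple way to turn test data into such a function is linear interpolation between measured points. The sketch below assumes that approach, which may differ from the function actually used; the table values are made-up placeholders, not the team's measurements, and the names `SHOT_TABLE` and `arm_angle_for_distance` are hypothetical.

```python
from bisect import bisect_left

# Hypothetical (distance in meters, arm angle in degrees) test points,
# sorted by distance. Real values would come from shooting tests.
SHOT_TABLE = [(1.0, 20.0), (2.0, 32.0), (3.0, 40.0), (4.0, 45.0)]


def arm_angle_for_distance(distance_m, table=SHOT_TABLE):
    """Linearly interpolates an arm angle, clamping outside the tested range."""
    distances = [d for d, _ in table]
    if distance_m <= distances[0]:
        return table[0][1]
    if distance_m >= distances[-1]:
        return table[-1][1]

    # Find the two test points that bracket the requested distance.
    i = bisect_left(distances, distance_m)
    (d0, a0), (d1, a1) = table[i - 1], table[i]
    t = (distance_m - d0) / (d1 - d0)
    return a0 + t * (a1 - a0)
```

Clamping at the ends of the table keeps the arm from being commanded to an untested angle when the distance reading falls outside the measured range.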

Simulation, Odometry, Data Tables:

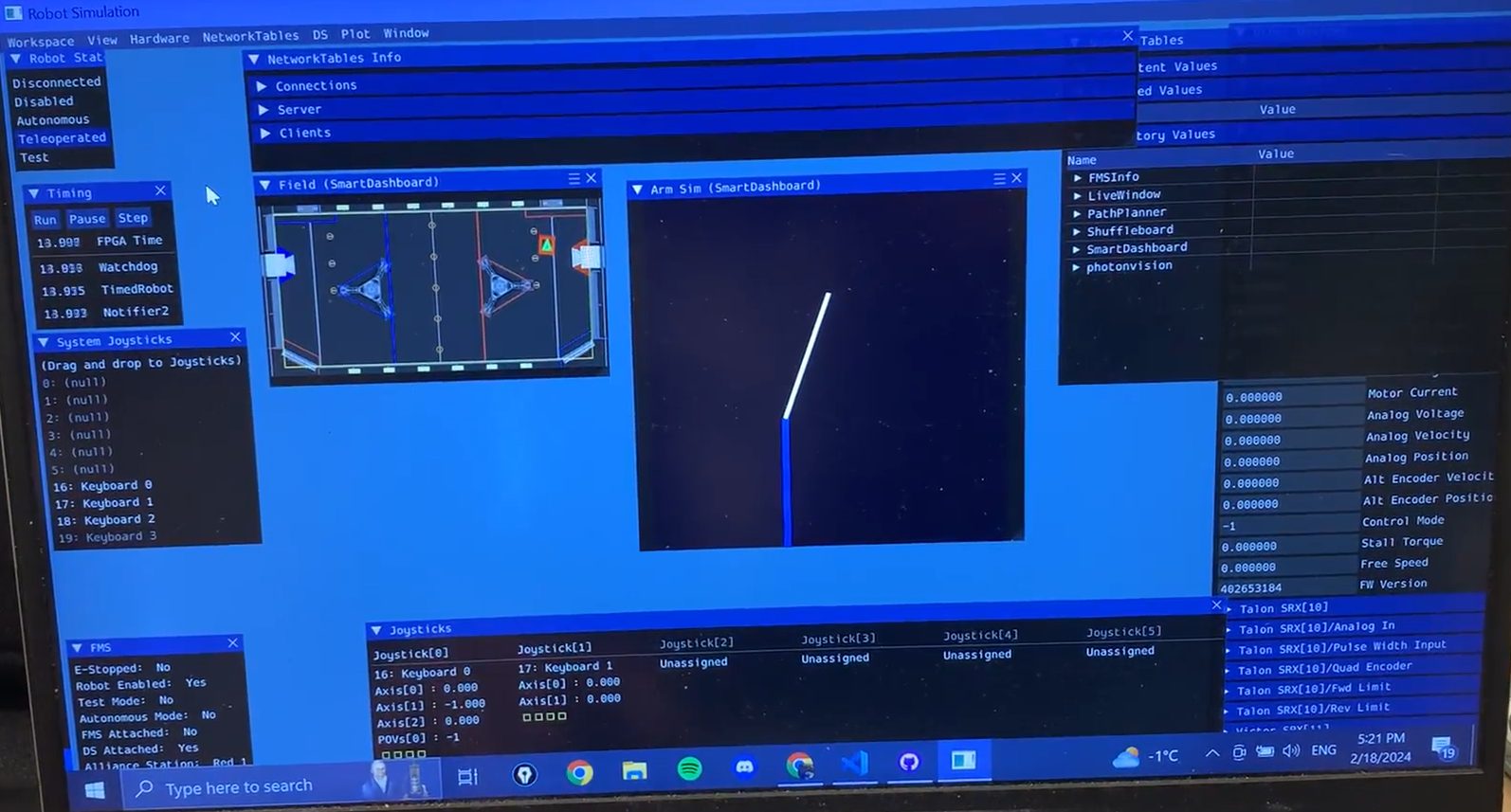

In 2023, our programming team was bottlenecked by build time, so this year I tried using the simulation library to let the programming team work in parallel while the robot was being manufactured.

For the simulation of the drivetrain on the field, the motor encoders and a gyroscope are used with odometry to report the robot's live position on the field. Additionally, an arm simulation was put together to simulate and tune PID constants and velocity and acceleration gains.
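As a rough illustration of how encoder and gyroscope readings combine into a field position, here is a simplified odometry sketch. It assumes a differential (tank-style) drivetrain, which the text does not specify, and uses a basic midpoint-heading approximation rather than whatever the robot's library does internally. The class name and its parameters are hypothetical.

```python
import math


class DifferentialOdometry:
    """Tracks (x, y, heading) from cumulative encoder distances and a gyro."""

    def __init__(self, x=0.0, y=0.0, heading_rad=0.0):
        self.x = x
        self.y = y
        self.heading = heading_rad
        self.prev_left = 0.0
        self.prev_right = 0.0

    def update(self, left_m, right_m, gyro_heading_rad):
        """left_m/right_m are cumulative wheel distances in meters.

        gyro_heading_rad is assumed continuous (not wrapped to +/- pi).
        """
        d_left = left_m - self.prev_left
        d_right = right_m - self.prev_right
        self.prev_left, self.prev_right = left_m, right_m

        # Distance traveled by the robot center since the last update.
        distance = (d_left + d_right) / 2.0

        # Use the average heading over the step to reduce error while turning.
        mid_heading = (self.heading + gyro_heading_rad) / 2.0
        self.x += distance * math.cos(mid_heading)
        self.y += distance * math.sin(mid_heading)
        self.heading = gyro_heading_rad
        return self.x, self.y, self.heading
```

Calling `update` every loop with fresh sensor values keeps the pose current, and in simulation the same call can be fed with simulated encoder and gyro values instead of real hardware readings.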

We used simulation to make custom autonomous routines with path splines: